From Wakapon

This page is a rough summary of various methods for optimization and curve fitting, including linear regression.

First, some definitions:

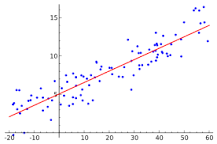

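- In statistics, linear regression fits a model that is linear in its parameters (a straight line in the simplest case) to a set of data points, usually by minimizing the sum of squared residuals.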
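As a rough illustration of the definition above, here is a minimal sketch of a straight-line least-squares fit in plain Python. The function name `fit_line` and the sample data are made up for this example; it assumes the simplest model, y = a*x + b, and uses the standard closed-form solution rather than any particular library.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b. Returns (a, b)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")

    mean_x = sum(xs) / n
    mean_y = sum(ys) / n

    # Sum of squared deviations of x, and of cross deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")

    a = sxy / sxx            # slope that minimizes the squared residuals
    b = mean_y - a * mean_x  # the fitted line passes through the mean point
    return a, b


# Hypothetical sample data lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)
```

With noisy data the same function returns the line that minimizes the sum of squared vertical distances to the points; the weighted and generalized variants listed in the contents change how those distances are weighted.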

Contents

Optimization

- Convex optimization

One-Dimensional Search Methods

- Golden Section Search

- Fibonacci Search

- Newton's Method

- Secant Method

Unconstrained Optimization and Neural Networks

- Descent methods

- Line search

- Descent methods with trust region

- Steepest descent

- Quadratic models

- Conjugate gradient methods

- Single-Neuron Training

- Backpropagation Algorithm

Newton-Type Methods

- Newton’s method

- Damped Newton methods

- Quasi–Newton methods

- DFP formula

- BFGS formulas

- Quasi–Newton implementation

Direct Search

- Simplex method

- Method of Hooke and Jeeves

Linear Data Fitting

- “Best” fit

- Linear least squares

- Weighted least squares

- Generalized least squares

- Polynomial fit

- Spline fit

- Choice of knots

Nonlinear Least Squares Problems

- Gauss–Newton method

- The Levenberg–Marquardt method

- Powell’s Dog Leg Method

- Secant version of the L–M method

- Secant version of the Dog Leg method

Duality

- The Lagrange dual function

- The Lagrange dual problem